Research

My research focuses on multimodal deep learning for sequential data. I am also interested in Vision Language Models, Large Language Models, and their applications to sequential data such as videos, sensor data (time series), and text.

News and Updates

Publications

LETS Forecast: Learning Embedology for Time Series Forecasting

Abrar Majeedi, Viswanatha Reddy Gajjala, Satya Sai Srinath Namburi GNVV, Nada Elkordi, Yin Li

International Conference on Machine Learning (ICML) 2025

Project Page / Code / Paper Link

A novel time series forecasting method that combines principles from nonlinear dynamical systems with deep learning to model latent temporal structure for accurate forecasts.

RICA2: Rubric-Informed, Calibrated Assessment of Actions

Abrar Majeedi, Viswanatha Reddy Gajjala, Satya Sai Srinath Namburi GNVV, Yin Li

European Conference on Computer Vision (ECCV) 2024

Project Page / Code / Paper Link

Assesses action quality in videos by incorporating human-designed scoring rubrics while providing calibrated uncertainty estimates.

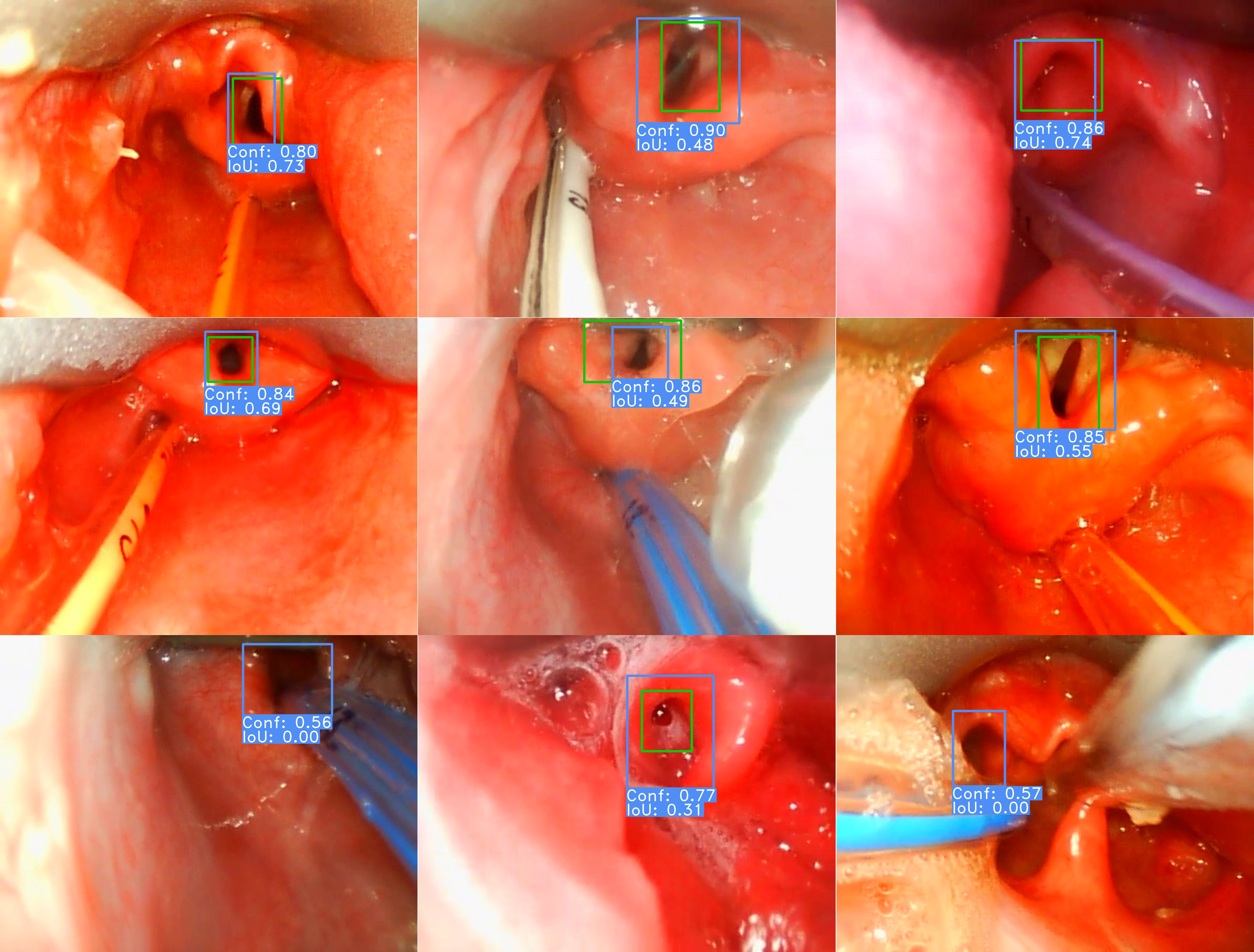

Glottic Opening Detection using Deep Learning for Neonatal Intubation with Video Laryngoscopy

Abrar Majeedi, Patrick Peebles, Yin Li, Ryan McAdams

Nature - Journal of Perinatology, Nov 2024

Paper Link

Automatically detects the glottic opening during neonatal intubation using video laryngoscopy to improve intubation outcomes.

Deep learning to quantify care manipulation activities in neonatal intensive care units

Abrar Majeedi, Ryan McAdams, Ravneet Kaur, Shubham Gupta, Harpreet Singh, Yin Li

npj Digital Medicine, June 2024

Paper Link / Code

Automatically quantifies care manipulation activities in neonatal intensive care units (NICUs) while integrating physiological signal data to monitor neonatal stress.

Full Reference Video Quality Assessment for Machine Learning-Based Video Codecs

Abrar Majeedi, Babak Naderi, Yasaman Hosseinkashi, Juhee Cho, Ruben Alvarez Martinez, Ross Cutler

Preprint, 2022

Paper / Code

Assesses the perceptual quality of videos encoded by machine learning-based video codecs.

Detecting Egocentric Actions with ActionFormer

Chenlin Zhang, Lin Sui, Abrar Majeedi, Viswanatha Reddy Gajjala, Yin Li

EPIC @ CVPR Workshop 2022

Report / Code

Won 2nd place in the Egocentric Action Detection challenge.